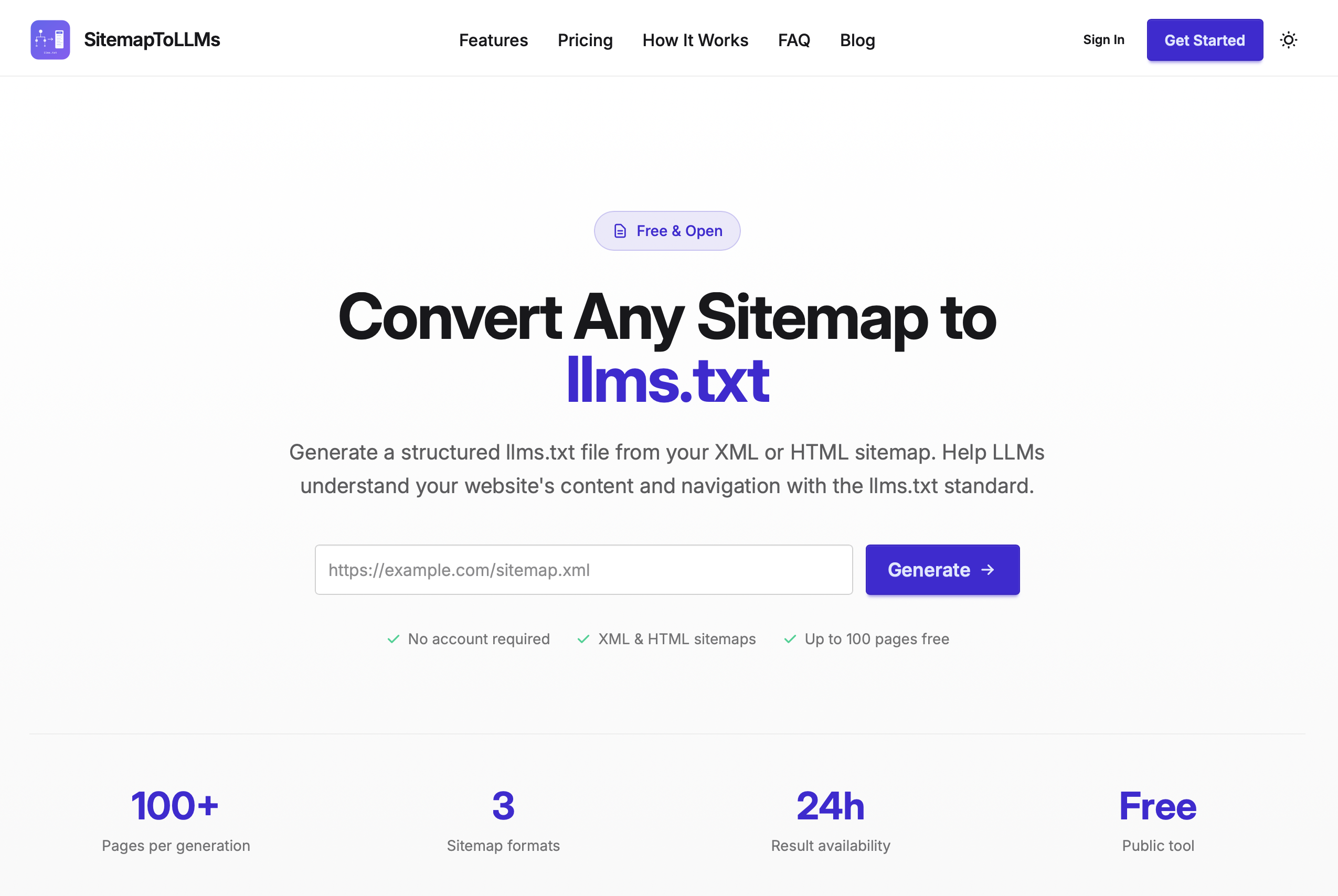

Revolutionize Your Content Discovery with SitemapToLLMs

As large language models (LLMs) become a primary channel for content discovery and recommendation, websites need a structured way to communicate their content to AI systems.

The llms.txt specification provides exactly that: a Markdown file served at your site root (/llms.txt) that describes your site’s structure, purpose, and key pages in a form LLMs can read directly.

SitemapToLLMs automates the generation of llms.txt files by converting your existing XML or HTML sitemap into a semantically organised, priority-sorted document designed for LLM consumption.

Automated LLM Integration

Effortlessly connect your website to AI systems using llms.txt files.

Enhanced Content Visibility

Boost your site’s presence in AI-driven recommendations.

The Problem

Search engines have long relied on robots.txt, sitemap.xml, and structured data.

LLMs do not.

When an AI model attempts to understand your website, it works with raw HTML, inconsistent metadata, and incomplete signals. The result is partial understanding and, in some cases, hallucinated context.

The llms.txt format introduces a standardised file that describes:

- What the site is and what it does
- Which pages exist and how they’re organised
- Brief descriptions of each page
- Logical grouping into meaningful sections

However, manually creating and maintaining this file is impractical for most websites. Content evolves. New pages are added. Old pages are removed. A static llms.txt file quickly becomes outdated — and misleading.

SitemapToLLMs solves this by automating the entire process.


How It Works

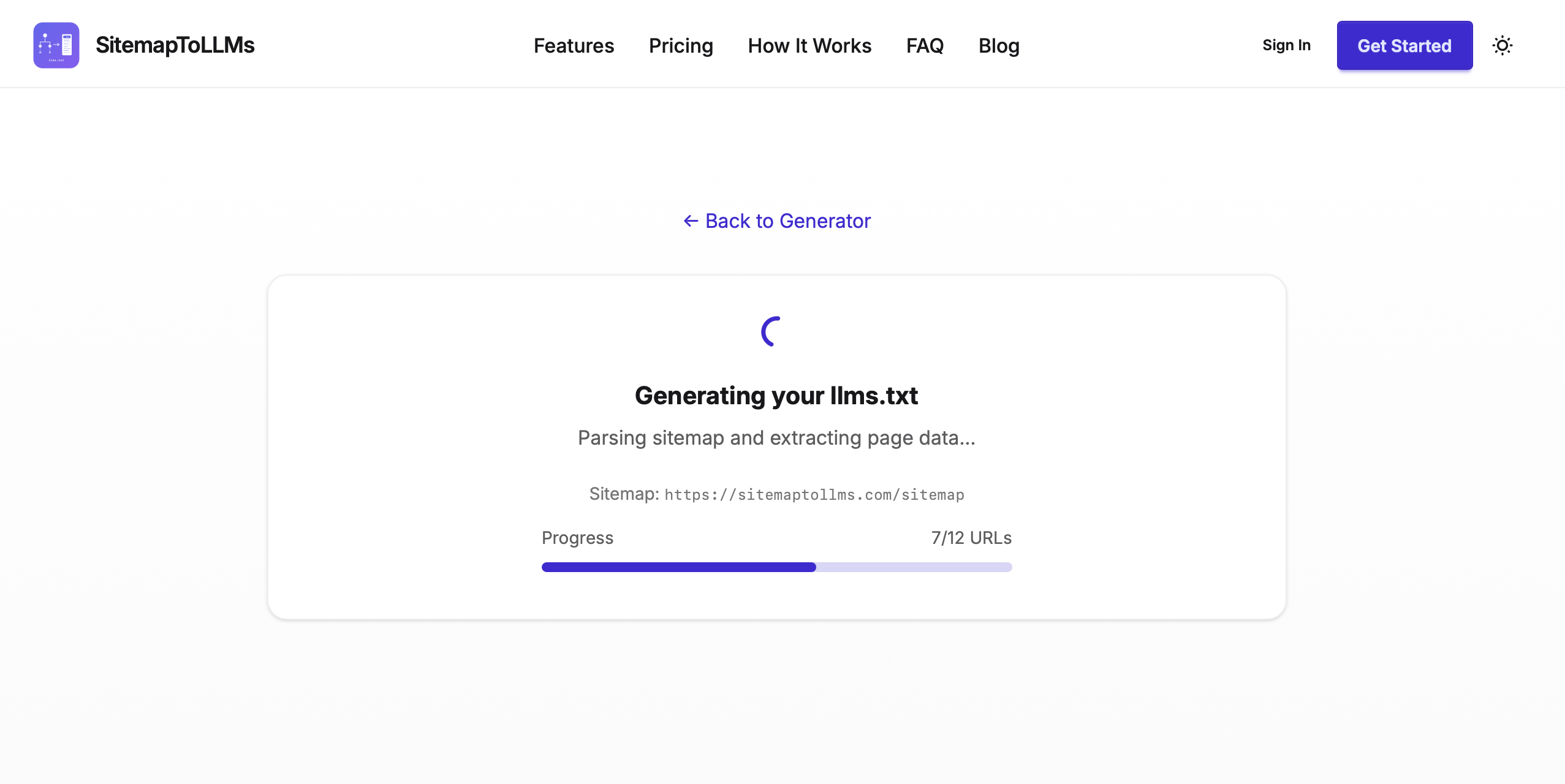

SitemapToLLMs takes your sitemap URL as input and produces a structured llms.txt as output. The generation pipeline consists of several stages:

Sitemap Parsing

The service accepts:

- XML sitemaps conforming to the sitemaps.org protocol
- Sitemap index files
- HTML sitemap pages

For XML sitemaps, <loc> entries are extracted, and sitemap index files are followed recursively into the child sitemaps they list.

For HTML sitemaps, links are extracted from the page structure.
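The parsing step can be sketched with Python’s standard library. This is an illustrative sketch, not the service’s own code (which runs on Laravel): the `fetch` callable is a hypothetical stand-in for an HTTP client, and keeping only same-host links from an HTML sitemap is an assumption about what "extracted from the page structure" means.

```python
import xml.etree.ElementTree as ET
from html.parser import HTMLParser
from typing import Callable, List, Optional, Set
from urllib.parse import urljoin, urlparse

# XML namespace defined by the sitemaps.org protocol.
NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def parse_xml_sitemap(url: str, fetch: Callable[[str], str],
                      seen: Optional[Set[str]] = None) -> List[str]:
    """Return page URLs, following sitemap index files into their children."""
    seen = set() if seen is None else seen
    if url in seen:  # guard against index files that reference each other
        return []
    seen.add(url)
    root = ET.fromstring(fetch(url))
    locs = [el.text.strip() for el in root.iter(f"{NS}loc")
            if el.text and el.text.strip()]
    if root.tag == f"{NS}sitemapindex":
        pages: List[str] = []
        for child_sitemap in locs:
            pages.extend(parse_xml_sitemap(child_sitemap, fetch, seen))
        return pages
    return locs  # a <urlset>: each <loc> is a page URL


class _LinkCollector(HTMLParser):
    """Collect unique same-host <a href> targets from an HTML sitemap page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.host = urlparse(base_url).netloc
        self.links: List[str] = []
        self._seen: Set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if not href or href.startswith(("#", "mailto:", "javascript:")):
            return
        absolute = urljoin(self.base_url, href)
        if urlparse(absolute).netloc == self.host and absolute not in self._seen:
            self._seen.add(absolute)
            self.links.append(absolute)


def parse_html_sitemap(url: str, fetch: Callable[[str], str]) -> List[str]:
    collector = _LinkCollector(url)
    collector.feed(fetch(url))
    collector.close()
    return collector.links
```

Injecting `fetch` keeps the parser independent of networking, so it can be exercised against in-memory documents before being wired to a real HTTP client.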

Intelligent URL Filtering

Not every URL in your sitemap belongs in llms.txt.

The generator applies two layers of filtering:

Path-based exclusions

- Authentication routes (/login, /register, /forgot-password)
- Admin and dashboard pages
- App-specific or internal routes

Pattern-based exclusions

- WordPress archives (/author/, /tag/, date archives)
- Pagination URLs
- Low-value duplicate patterns

This ensures the output focuses on meaningful content pages rather than technical or utility routes.
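The two filtering layers could look roughly like this. The prefix list and regular expressions are hypothetical examples of each category above, not the service’s actual rules, and treating trailing-slash variants as the "low-value duplicates" is an assumption.

```python
import re
from typing import Iterable, List
from urllib.parse import urlparse

# Hypothetical rule lists mirroring the categories above.
EXCLUDED_PREFIXES = ("/login", "/register", "/forgot-password",
                     "/admin", "/dashboard", "/app")
EXCLUDED_PATTERNS = [
    re.compile(r"/(author|tag)/"),            # WordPress author and tag archives
    re.compile(r"^/\d{4}(/\d{2}){0,2}/?$"),   # date archives: /2024/, /2024/05/
    re.compile(r"/page/\d+/?$"),              # path-style pagination
]
PAGINATION_QUERY = re.compile(r"(^|&)(page|paged)=\d+")  # ?page=2, ?paged=3


def keep_url(url: str) -> bool:
    parsed = urlparse(url)
    path = parsed.path or "/"
    # Layer 1: path-based exclusions, matched on whole segments so that
    # /administrator-guide is not caught by /admin.
    if any(path == p or path.startswith(p + "/") for p in EXCLUDED_PREFIXES):
        return False
    # Layer 2: pattern-based exclusions.
    if any(rx.search(path) for rx in EXCLUDED_PATTERNS):
        return False
    return not PAGINATION_QUERY.search(parsed.query)


def filter_urls(urls: Iterable[str]) -> List[str]:
    """Apply both layers, then drop trailing-slash duplicates, keeping order."""
    kept: List[str] = []
    seen = set()
    for url in urls:
        key = url.rstrip("/")
        if keep_url(url) and key not in seen:
            seen.add(key)
            kept.append(url)
    return kept
```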

Page Content Extraction

For each remaining URL, the service retrieves and extracts:

- Page title (from <title> or <h1>)
- Description (meta description, Open Graph tags, or first meaningful paragraph)
- URL path structure for grouping logic
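The title and description fallbacks can be sketched with `html.parser` from the standard library. The fallback order follows the list above; the eight-word threshold for a "meaningful" paragraph is a hypothetical choice, and a production extractor would likely use a more forgiving HTML library.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional, Tuple

MIN_PARAGRAPH_WORDS = 8  # hypothetical threshold for a "meaningful" paragraph


class _PageMeta(HTMLParser):
    def __init__(self) -> None:
        super().__init__()
        self.texts: Dict[str, str] = {}   # first non-empty <title> and <h1> text
        self.meta: Dict[str, str] = {}    # description / og:description
        self.paragraphs: List[str] = []
        self._tag: Optional[str] = None   # element whose text is being collected
        self._buf: List[str] = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "meta":
            key = (a.get("name") or a.get("property") or "").lower()
            if key in ("description", "og:description") and a.get("content"):
                self.meta.setdefault(key, a["content"].strip())
        elif tag in ("title", "h1", "p") and self._tag is None:
            self._tag, self._buf = tag, []

    def handle_data(self, data):
        if self._tag is not None:
            self._buf.append(data)  # includes text of nested tags such as <a>

    def handle_endtag(self, tag):
        if tag != self._tag:
            return
        text = " ".join("".join(self._buf).split())  # collapse whitespace
        if text and tag == "p":
            self.paragraphs.append(text)
        elif text:
            self.texts.setdefault(tag, text)
        self._tag = None


def extract_title_and_description(html: str) -> Tuple[str, str]:
    parser = _PageMeta()
    parser.feed(html)
    parser.close()
    title = parser.texts.get("title") or parser.texts.get("h1", "")
    description = (
        parser.meta.get("description")
        or parser.meta.get("og:description")
        or next((p for p in parser.paragraphs
                 if len(p.split()) >= MIN_PARAGRAPH_WORDS), "")
    )
    return title, description
```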

The fetcher uses a dedicated user agent:

SitemapToLLMsTxt/1.0 (+https://sitemaptollms.com)

Rate limiting is enforced to prevent excessive load on target servers.
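A fetcher that sends the user agent above and spaces out requests might look like this. Enforcing a minimum interval per host is an assumption about how the rate limiting works, and the one-second default is hypothetical; the clock and sleep functions are injectable so the limiter can be checked without network access.

```python
import time
import urllib.request
from typing import Callable, Dict
from urllib.parse import urlparse

USER_AGENT = "SitemapToLLMsTxt/1.0 (+https://sitemaptollms.com)"


class PoliteFetcher:
    """Fetch pages with a fixed User-Agent and a minimum delay per host."""

    def __init__(self, min_interval: float = 1.0,  # hypothetical default
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.min_interval = min_interval
        self.clock = clock
        self.sleep = sleep
        self._last_request: Dict[str, float] = {}

    def wait_for_slot(self, url: str) -> float:
        """Sleep until this host may be contacted again; return seconds waited."""
        host = urlparse(url).netloc
        last = self._last_request.get(host)
        waited = 0.0
        if last is not None:
            waited = max(0.0, self.min_interval - (self.clock() - last))
            if waited:
                self.sleep(waited)
        self._last_request[host] = self.clock()
        return waited

    def fetch(self, url: str, timeout: float = 10.0) -> str:
        self.wait_for_slot(url)
        req = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            charset = resp.headers.get_content_charset() or "utf-8"
            return resp.read().decode(charset, errors="replace")
```

Because the delay is tracked per host, pages on different sites can still be fetched back to back while each individual server sees a bounded request rate.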

Semantic Section Grouping

Pages are grouped based on URL path structure.

Rather than exposing raw slugs, the system applies a semantic transformation:

- /blog/getting-started-with-apis → Blog
- /docs/api-reference → Docs
- /terms, /privacy, /cookies → Legal

Slug-to-name conversion handles common abbreviations (API, PHP, HTML, SEO, LLM, CSS, etc.) and generates readable section headings.
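Grouping and slug-to-name conversion can be sketched as follows. Using the first path segment as the section key, the "Home" label for the root URL, the Legal override table, and the plural handling ("apis" → "APIs") are all assumptions made for illustration; only the abbreviation list comes from the text above.

```python
from urllib.parse import urlparse

ABBREVIATIONS = {"api", "php", "html", "seo", "llm", "css"}
# Hypothetical overrides for paths that belong under one shared heading.
SECTION_OVERRIDES = {"terms": "Legal", "privacy": "Legal", "cookies": "Legal"}


def _format_word(word: str) -> str:
    lower = word.lower()
    if lower in ABBREVIATIONS:
        return lower.upper()
    if lower.endswith("s") and lower[:-1] in ABBREVIATIONS:
        return lower[:-1].upper() + "s"  # plural form, e.g. "apis" -> "APIs"
    return lower.capitalize()


def slug_to_name(slug: str) -> str:
    words = slug.replace("_", "-").split("-")
    return " ".join(_format_word(w) for w in words if w)


def section_for(url: str) -> str:
    """Map a page URL to a readable section heading via its first path segment."""
    segments = [s for s in urlparse(url).path.split("/") if s]
    if not segments:
        return "Home"
    first = segments[0].lower()
    return SECTION_OVERRIDES.get(first, slug_to_name(first))
```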

Priority-Based Ordering

Sections are ordered by relevance, not alphabetically.

- Homepage and primary content appear first
- Secondary sections follow
- Utility sections such as Legal appear last

This mirrors how a human would summarise a site: most important information first.
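One way to express relevance ordering is a sort on a priority tier. The tier values below, and the tie-break (larger sections first, then alphabetical), are hypothetical; the text only states that the homepage and primary content lead and utility sections such as Legal come last.

```python
from typing import Dict, List

# Hypothetical priority tiers: lower numbers sort first.
SECTION_PRIORITY = {"Home": 0, "Docs": 1, "Blog": 2, "Legal": 99}
DEFAULT_PRIORITY = 50  # any secondary section not listed above


def order_sections(sections: Dict[str, List[str]]) -> List[str]:
    """Return section names ordered by tier, then by size (desc), then by name."""
    return sorted(
        sections,
        key=lambda name: (SECTION_PRIORITY.get(name, DEFAULT_PRIORITY),
                          -len(sections[name]),
                          name),
    )
```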

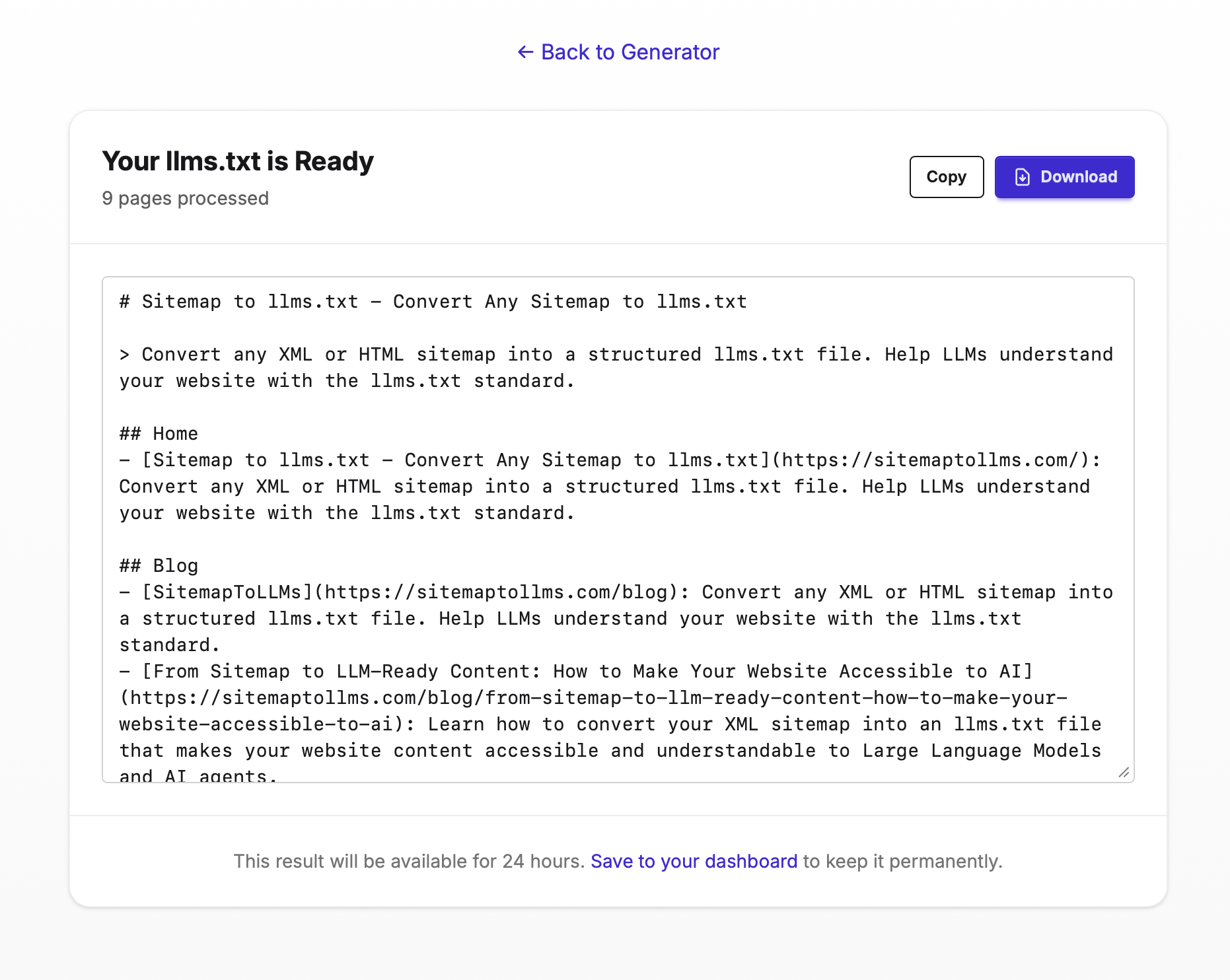

Output Generation

The final llms.txt follows the specification format:

# Site Name

> Brief description of what the site does.

## Section Name

- [Page Title](https://example.com/page): Brief description

## Legal

- [Terms of Use](https://example.com/terms): Terms and conditions
- [Privacy Policy](https://example.com/privacy): Privacy policy

The result is a clean, structured overview that LLMs can parse efficiently.

Automated Regeneration

A static llms.txt file becomes outdated as soon as your content changes.

For Pro sites, SitemapToLLMs supports automated regeneration on a daily, weekly, or monthly schedule. A background scheduler evaluates due sites and dispatches queued jobs to rebuild their files.

Optional email notifications confirm when a new version has been generated.

This ensures your AI-facing structure stays aligned with your actual site.
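The scheduler’s "is this site due?" check can be sketched in Python. The service itself uses Laravel queues backed by Redis; here `queue.Queue` stands in for that queue, and the `Site` fields and the rule that a never-generated site is due immediately are assumptions. Monthly schedules clamp to the last day of shorter months.

```python
import calendar
from dataclasses import dataclass
from datetime import datetime, timedelta
from queue import Queue
from typing import Iterable, List, Optional


@dataclass
class Site:
    url: str
    frequency: str                      # "daily", "weekly" or "monthly"
    last_generated: Optional[datetime]  # None if llms.txt was never built


def add_month(dt: datetime) -> datetime:
    year, month = (dt.year + 1, 1) if dt.month == 12 else (dt.year, dt.month + 1)
    day = min(dt.day, calendar.monthrange(year, month)[1])  # e.g. Jan 31 -> Feb 29
    return dt.replace(year=year, month=month, day=day)


def next_due(site: Site) -> Optional[datetime]:
    if site.last_generated is None:
        return None  # never generated: due immediately
    if site.frequency == "daily":
        return site.last_generated + timedelta(days=1)
    if site.frequency == "weekly":
        return site.last_generated + timedelta(weeks=1)
    if site.frequency == "monthly":
        return add_month(site.last_generated)
    raise ValueError(f"unknown frequency: {site.frequency}")


def dispatch_due_sites(sites: Iterable[Site], now: datetime, jobs: Queue) -> List[str]:
    """Queue a rebuild job for every site whose next run is at or before now."""
    dispatched = []
    for site in sites:
        due = next_due(site)
        if due is None or due <= now:
            jobs.put(site.url)  # a worker would pick this up and rebuild llms.txt
            dispatched.append(site.url)
    return dispatched
```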

Technical Stack

SitemapToLLMs is built using:

- Laravel 12 with queue-based asynchronous job processing
- Livewire 3 for the interactive dashboard
- Redis for queue management
- Stripe (via Laravel Cashier) for per-site subscriptions
- Postmark for transactional email delivery

The architecture is designed for scalability and non-blocking processing of large sitemap files.

Why This Matters

AI-driven discovery is growing rapidly.

LLMs are increasingly used to:

- Recommend tools
- Summarise documentation
- Answer product questions
- Compare services

Websites that provide structured, machine-readable signals are likely to be better represented in AI-generated responses.

llms.txt is an early standard — but early standards often define the direction of ecosystems. Just as robots.txt and sitemaps became foundational for search, structured AI-facing files will likely become foundational for machine discovery.

SitemapToLLMs provides a practical, automated way to adopt that standard today.

Unlock the Power of SitemapToLLMs

Transform your website’s interaction with AI by generating your llms.txt file effortlessly, and start optimizing your content for large language models today.